Welcome to my Career Portfolio

Below is a portfolio of projects I have worked on. The menu on the left links to my Draw3D software and my Computer Vision programming tutorials. If you are interested in working with me, feel free to Contact Me / About Me.

My current Resume / CV is available to download here: Resume.pdf

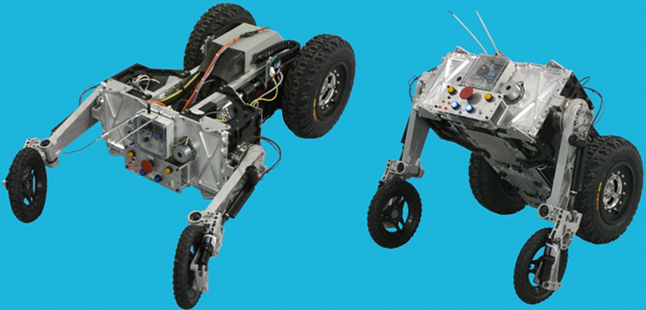

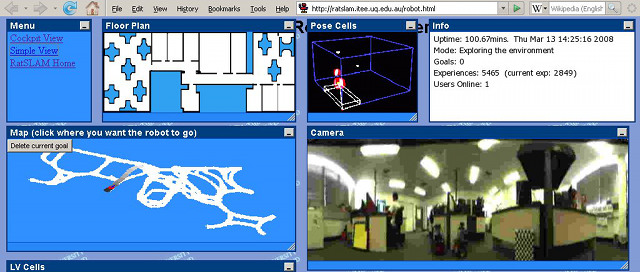

My Master's thesis in Robotics is available to download here:

'A Framework for the Long-Term Operation of a Mobile Robot via the Internet': MastersThesis.pdf